PREMIER Quality Assurance

Objectives

Quality assurance is intended to ensure that both PREMIER's requirements and the requirements the organization or laboratory has set for itself are implemented and continuously improved.

Background

PREMIER is a conceptual framework of modules with minimum requirements that can be adapted to the needs and resources of any organization. An essential building block of PREMIER is quality assurance with its specific quality measures. Quality assurance in preclinical research relies on measurement and continuous improvement to assess the effectiveness of the quality measures applied.

Tasks / Actions

To create a lab-specific action plan, the PREMIER team first carries out an assessment that determines the laboratory's status quo with regard to existing quality tools. The general tasks and actions needed to implement the module are described below.

PDCA Cycle

The foundation of quality assurance is measurement and continuous improvement, which make it possible to assess the effectiveness of the quality measures you use. To maintain a high level of performance in research, it is essential to react to changes in internal and external requirements and to develop new solutions. PREMIER builds on Deming's PDCA cycle (Plan-Do-Check-Act), the most widely tested and accepted basis for continuous improvement across a broad range of organizations, including clinical research. This makes PREMIER comparable to other quality management systems.

Key Performance Indicators

The success of research is complex and difficult to measure; therefore, only indirect methods can be used to approach the topic. One option is to use key performance indicators (KPIs). Indicators are specific measures or quality criteria defined to track achievable milestones or to allow clear, valid conclusions about specific areas. Each organization or laboratory has to define its own key performance indicators for its working environment. To be effective, they need to be collected and analyzed at regular intervals (usually yearly) or when needed. The indicators themselves should also be reviewed regularly and adjusted to the changing requirements of the research work. This makes it possible to identify developments and trends in the core areas and to take appropriate countermeasures if necessary.

A laboratory should define its specific key performance indicators depending on its field of research. They are collected, for example in a table, by a designated person and evaluated by management. Some examples are listed below:

- number of open access publications

- number of registered reports

- number of studies clearly documented in a laboratory notebook

- amount of funds raised

- number of ORCID iDs in use

- number of entries in an electronic laboratory notebook

- number of completed master's and doctoral theses

- number of participants in mandatory training courses, etc.

The results should be discussed with all project leaders and involved employees and, if necessary, countermeasures should be taken to reverse negative developments.

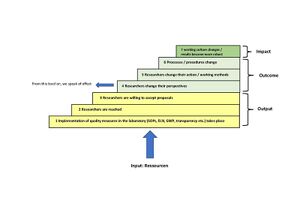
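The yearly collection and evaluation of KPIs described above can be sketched as a small record per indicator plus a check that flags indicators whose value fell since the previous collection round. This is a minimal, hypothetical sketch: the class `KpiRecord`, the function `flag_negative_developments`, and the assumption that a higher value is always better (true for the count-type examples listed above) are illustrative choices, not part of PREMIER.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class KpiRecord:
    """One KPI tracked across collection rounds (hypothetical structure).

    `values` maps a collection year to the value measured in that year.
    """
    name: str
    values: dict[int, float] = field(default_factory=dict)

    def record(self, year: int, value: float) -> None:
        self.values[year] = value

    def change_since_previous(self, year: int) -> float | None:
        """Change relative to the most recent earlier year, or None if not comparable."""
        earlier = [y for y in self.values if y < year]
        if year not in self.values or not earlier:
            return None
        return self.values[year] - self.values[max(earlier)]


def flag_negative_developments(kpis: list[KpiRecord], year: int) -> list[str]:
    """Names of KPIs whose value dropped compared with the previous round.

    Assumption: for every KPI passed in, a higher value is better.
    """
    flagged = []
    for kpi in kpis:
        delta = kpi.change_since_previous(year)
        if delta is not None and delta < 0:
            flagged.append(kpi.name)
    return flagged
```

For example, recording "open access publications" as 12 in one year and 9 in the next would flag that indicator for the discussion with project leaders; whether a drop is actually a problem remains a judgment for that discussion, not for the code.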

Impact Analysis

A second method of indirectly measuring quality is impact analysis. It examines whether, and to what extent, specific measures have achieved their intended effects. Impact analysis asks four questions:

- Input: How many resources flow into the project?

- Output: What results or services does the project deliver, and what is achieved?

- Outcome: What changes does this bring about for researchers, and to what extent?

- Impact: To which developments in research do the project and its results contribute?

Chain of effects: INPUT -> OUTPUT -> OUTCOME -> IMPACT

Impact analysis means recording, investigating and evaluating all expected and unexpected effects of a project. It thus allows the project to be actively steered.

It allows you to:

- react promptly to unwanted changes or results, and

- draw more precise conclusions about which changes in the project lead to which consequences.
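Recording expected and unexpected effects along the chain INPUT -> OUTPUT -> OUTCOME -> IMPACT can be sketched as a log that keeps planned and observed effects per stage and reports the differences in both directions. This is a hypothetical illustration: `ImpactLog`, its methods, and the idea of storing effects as short text labels are assumptions made for the sketch, not a PREMIER tool.

```python
from __future__ import annotations

from dataclasses import dataclass, field

# The four stages of the chain of effects, in order.
STAGES = ("input", "output", "outcome", "impact")


def _empty_stages() -> dict[str, set[str]]:
    return {s: set() for s in STAGES}


@dataclass
class ImpactLog:
    """Hypothetical log of expected (planned) and observed effects per stage."""
    expected: dict[str, set[str]] = field(default_factory=_empty_stages)
    observed: dict[str, set[str]] = field(default_factory=_empty_stages)

    @staticmethod
    def _check(stage: str) -> None:
        if stage not in STAGES:
            raise ValueError(f"unknown stage {stage!r}; expected one of {STAGES}")

    def expect(self, stage: str, effect: str) -> None:
        self._check(stage)
        self.expected[stage].add(effect)

    def observe(self, stage: str, effect: str) -> None:
        self._check(stage)
        self.observed[stage].add(effect)

    def unexpected(self) -> dict[str, set[str]]:
        """Effects observed at a stage that were not planned (possibly unwanted)."""
        diffs = {s: self.observed[s] - self.expected[s] for s in STAGES}
        return {s: d for s, d in diffs.items() if d}

    def not_yet_achieved(self) -> dict[str, set[str]]:
        """Planned effects that have not (yet) been observed."""
        diffs = {s: self.expected[s] - self.observed[s] for s in STAGES}
        return {s: d for s, d in diffs.items() if d}
```

Reviewing both reports at regular intervals is one way to react promptly to unwanted results while keeping a record of which change led to which consequence.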

Monitoring and Evaluation

Two instruments of impact analysis are monitoring and evaluation. They address different questions and objectives (see table).

| | Monitoring | Evaluation |
|---|---|---|
| What do you want to know? | What happens in the project? | Why does something happen, with what quality and with what consequences (impacts)? |
| Why? | | |
| Who? | Internal: employees working on the project | Internal and external |
| When? | Continuously, throughout the entire project | During the project, at its end, or some time after it has ended |
| Which stage of the impact logic is relevant? | Inputs, outputs and easily identifiable effects (outcomes) | Impacts (outcome and impact) |

Monitoring

Monitoring means collecting information on a regular basis to observe the progress of the project and to check whether quality standards are being met.

- Monitoring is suitable for documenting inputs, outputs and easy-to-understand impacts.

- When carried out systematically, monitoring also allows later statements about the project as a whole.

- Data obtained through monitoring can show that a project is not running as planned.
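As an illustration of how monitoring data can show that a project is off plan, the sketch below compares planned milestone dates with actual completion dates. It is hypothetical: `Milestone`, `behind_plan`, and the choice of due dates as the monitored quantity are assumptions. Note that it only reports *that* something is late; explaining *why* is the job of an evaluation.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date


@dataclass
class Milestone:
    """Hypothetical monitoring record: a planned milestone and its completion date, if any."""
    name: str
    due: date
    completed: date | None = None


def behind_plan(milestones: list[Milestone], today: date) -> list[str]:
    """Describe milestones that are overdue or were completed later than planned."""
    late = []
    for m in milestones:
        if m.completed is None and today > m.due:
            late.append(f"{m.name}: overdue since {m.due.isoformat()}")
        elif m.completed is not None and m.completed > m.due:
            days = (m.completed - m.due).days
            late.append(f"{m.name}: completed {days} days late")
    return late
```

Running such a check at every regular monitoring round keeps the record continuous, which is what later allows statements about the project as a whole.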

Evaluation

- An evaluation examines and assesses processes, results and impacts and can be carried out at different times in the project.

- The focus is on outcomes and impact.

- An evaluation can be used to determine why a project is not running as planned.

Internal Validation

Internal validation should help us answer the following questions:

- Where do we stand? Where is our baseline?

- Are the quality measures we introduced being implemented?

- Have we taken the right measures (according to our requirements), or have we overshot the target?

- Are our quality measures effective?

- Are our milestones being achieved (transparency, knowledge sharing, open access, open data, publishing null results, data storage, documentation, study design, statistical evaluation, randomization and blinding, pre-registration, etc.)?

To answer these questions, various forms of internal validation (accompanying research), such as self-assessments and internal audits, can be carried out. These:

- determine the status quo of implemented measures,

- identify gaps and reveal problems, and

- allow processes, procedures and measures to be readjusted.

External Validation

External validation consists of third-party assessments, such as audits, on-site visits or peer audits, carried out by external parties. These can be auditors or cooperation partners who check specific processes for feasibility and effectiveness. The aim is to check whether the laboratories' processes and workflows meet their own requirements. An outside view from a neutral person often helps against the "operational blindness" that tends to develop after years in the same laboratory.

Peer audits, a special form of external validation, are a promising new tool for soliciting external feedback and fostering professional exchange on ongoing projects at eye level. They are very effective in improving methods and processes.

PREMIER recommends conducting at least one peer audit during a project's lifetime, especially if specific methodological challenges need to be solved and an exchange with colleagues is desired.

Auditing contributes to transparency. Auditing in general, and peer audits in particular, might in future serve as fundamental processes of open and transparent science.

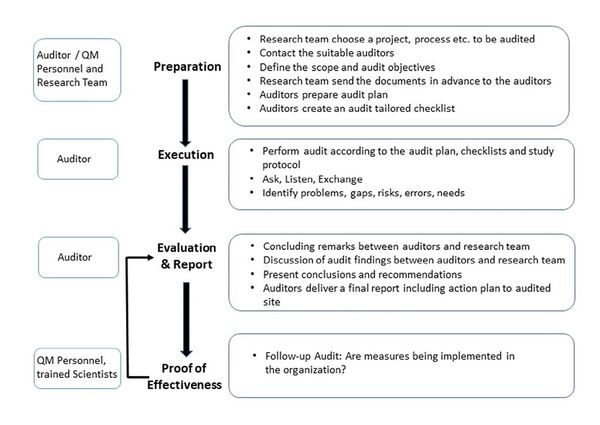

Audits

Internal and external audits are further quality assurance measures. They are part of the PDCA cycle (plan-do-check-act) at the "check" level. The measures identified in the audits are implemented and the continuous improvement process is set in motion ("act"). Audits in PREMIER meet the following requirements:

- They do not focus on bureaucratic processes but validate the content of the research processes.

- They are accepted by personnel at all levels of the organization.

Based on our experience with various forms of audits, we recommend the following in PREMIER:

- Error management with LabCIRS (self-assessment) (see PLOS Biology)

- Internal audits in variable forms depending on need, such as method, document, project, process and data audits

- Peer audits as external audits (professional exchange, expertise at eye level)

These forms of audit have proven effective in our setting and are accepted by scientists (see PLOS ONE).

All audits run through a predefined workflow.

Audit workflow

Audit results are evaluated qualitatively. At the final meeting of each audit, ask the participating employees for feedback and document it in the audit report. Based on these reports, follow-up audits can be further developed and improved.

A checklist for carrying out audits and a template for the audit plan can be found here.

Risk Assessment

In PREMIER, risk assessment takes place indirectly at various levels:

- with the help of specific, very focused audits (internal and peer audits)

- with an anonymous error reporting system (LabCIRS)

- with specific key performance indicators that cover all research areas

- with accompanying research to monitor the status quo of measures introduced

- with a specific experimental design that considers risks in advance, before the project starts, and tries to avoid them with targeted countermeasures (experimental design template)

Using the measures listed above, risks, errors and gaps are:

- identified

- analyzed

- rated

- reduced / avoided / solved

- monitored

- documented
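One way to keep track of where each risk stands in these steps is a simple risk-register entry that advances through them in the listed order and leaves a note at every step. This is a hypothetical sketch: `RiskEntry`, `Step`, the strict ordering of steps, and the example sources are assumptions, not a PREMIER specification; in practice some steps (for example documentation) may run in parallel.

```python
from __future__ import annotations

from dataclasses import dataclass, field
from enum import IntEnum


class Step(IntEnum):
    """The handling steps listed above, assumed to be passed in this order."""
    IDENTIFIED = 1
    ANALYZED = 2
    RATED = 3
    MITIGATED = 4  # reduced / avoided / solved
    MONITORED = 5
    DOCUMENTED = 6


@dataclass
class RiskEntry:
    """Hypothetical risk-register entry; `source` names the measure that surfaced it."""
    description: str
    source: str  # e.g. "internal audit", "LabCIRS report", "KPI review"
    step: Step = Step.IDENTIFIED
    history: list[tuple[Step, str]] = field(default_factory=list)

    def __post_init__(self) -> None:
        self.history.append((self.step, f"identified via {self.source}"))

    def advance(self, note: str) -> Step:
        """Move to the next step and store a note, so each step leaves a written trace."""
        if self.step is Step.DOCUMENTED:
            raise ValueError("risk has already passed through all steps")
        self.step = Step(self.step + 1)
        self.history.append((self.step, note))
        return self.step
```

Recording the source of each entry makes it possible to see later which of the measures (audits, LabCIRS, KPIs, accompanying research, experimental design) surfaced which risks.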

In summary, all measures result in an overall view that allows developments, trends and risks to be identified and, if necessary, counteracted.

References

- Deming WE. Out of the Crisis. Massachusetts Institute of Technology Press; 1986.

- Deming WE. The New Economics for Industry, Government, and Education. Boston, MA: MIT Press; 1993. p. 132. ISBN 0262541165.

- Kurreck C, Castaños-Vélez E, Bernard R, Dirnagl U. PREMIER: Structured quality assurance from and for academic preclinical biomedicine. Pre-registration.

- Dirnagl U, Kurreck C, Castaños-Vélez E, Bernard R. Quality management for academic laboratories: burden or boon? Professional quality management could be very beneficial for academic research but needs to overcome specific caveats. EMBO Rep. 2018 Nov;19(11):e47143. doi: 10.15252/embr.201847143. Epub 2018 Oct 19. PubMed PMID: 30341068.

- Kurreck C, Castaños-Vélez E, Freyer D, Blumenau S, Przesdzing I, Bernard R, Dirnagl U. Improving quality of preclinical academic research through auditing: A feasibility study. PLOS ONE.

- Dirnagl U, Przesdzing I, Kurreck C, Major S. A Laboratory Critical Incident and Error Reporting System for Experimental Biomedicine. PLoS Biol. 2016;14(12):e2000705. Epub 2016/12/03. doi: 10.1371/journal.pbio.2000705. PubMed PMID: 27906976.

- Bewertung qualitativer Forschung [Evaluation of qualitative research]. SpringerLink.
